
AI keeps getting cheaper with every passing day!

Just a couple of weeks ago, the DeepSeek V3 model sent NVIDIA’s stock into a downward spiral. Well, today we have another cost-effective model launch. At this rate of innovation, I am thinking of selling off my NVIDIA stock, lol.

Developed by researchers at Stanford and the University of Washington, the s1 AI model was trained for a mere $50.

Yes – only $50.

This further challenges the dominance of multi-million-dollar models like OpenAI’s o1, DeepSeek’s R1, and others.

This breakthrough highlights how innovation in AI no longer requires massive budgets, potentially democratizing access to advanced reasoning capabilities.

Below, we explore s1’s development, advantages, and implications for the AI engineering industry.

Here’s the original paper for your reference – s1: Simple test-time scaling

How s1 was built: Breaking down the methodology

It is really interesting to see how researchers across the world are working with minimal resources to bring down costs. And these efforts are working too.

I have tried to keep it simple and jargon-free so it is easy to understand. Keep reading!

Knowledge distillation: The secret sauce

The s1 model uses a technique called knowledge distillation.

Here, a smaller AI model mimics the reasoning processes of a larger, more sophisticated one.

Researchers trained s1 using outputs from Google’s Gemini 2.0 Flash Thinking Experimental, a reasoning-focused model available via Google AI Studio. The team avoided resource-heavy techniques like reinforcement learning. Instead, they used supervised fine-tuning (SFT) on a dataset of just 1,000 curated questions. These questions were paired with Gemini’s answers and detailed reasoning.
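To make the idea concrete, here is a minimal sketch of how one distilled training example could be assembled from a question, the teacher model’s reasoning, and its final answer. The class name, the `<think>` delimiters, and the prompt layout are assumptions made for illustration; the actual s1 data template may look different.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class DistilledExample:
    question: str   # curated question
    reasoning: str  # reasoning trace produced by the teacher model
    answer: str     # final answer produced by the teacher model


# Hypothetical delimiters; not taken from the s1 code base.
THINK_START = "<think>"
THINK_END = "</think>"


def to_sft_pair(ex: DistilledExample) -> Tuple[str, str]:
    """Turn one distilled example into a (prompt, target) pair for SFT."""
    prompt = f"Question: {ex.question}\n"
    target = f"{THINK_START}{ex.reasoning}{THINK_END}\nAnswer: {ex.answer}"
    return prompt, target


example = DistilledExample(
    question="What is 12 * 13?",
    reasoning="12 * 13 = 12 * 10 + 12 * 3 = 120 + 36 = 156.",
    answer="156",
)
prompt, target = to_sft_pair(example)
print(prompt + target)
```

The student model is then fine-tuned to produce the target text when given the prompt, so it learns the teacher’s reasoning style along with the answer.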

What is supervised fine-tuning (SFT)?

Supervised Fine-Tuning (SFT) is a machine learning technique. It is used to adapt a pre-trained Large Language Model (LLM) to a specific task. For this process, it uses labeled data, where each data point is labeled with the correct output.

Adopting this kind of specificity in training has several advantages:

– Improves a model’s performance on specific tasks

– Improves data efficiency

– Saves resources compared to training from scratch

– Allows for customization

– Improves a model’s ability to handle edge cases and control its behavior.
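For readers who want to see what “learning from labeled data” means numerically, below is a minimal sketch of the loss that SFT typically minimizes: the average negative log-likelihood of the correct tokens. Computing the loss only on the response tokens (and not on the prompt) is a common convention that is assumed here, not a detail confirmed by this article. The probabilities are made-up numbers.

```python
import math


def sft_loss(token_probs, is_target):
    """Average negative log-likelihood over target (response) tokens only.

    token_probs[i]: probability the model assigned to the correct i-th token.
    is_target[i]:   True for response tokens, False for prompt tokens.
    """
    losses = [-math.log(p) for p, t in zip(token_probs, is_target) if t]
    if not losses:
        raise ValueError("no target tokens to train on")
    return sum(losses) / len(losses)


# Made-up probabilities: two prompt tokens followed by two response tokens.
probs = [0.9, 0.8, 0.5, 0.25]
mask = [False, False, True, True]
loss = sft_loss(probs, mask)
print(f"SFT loss: {loss:.4f}")
```

Training nudges the model’s weights so that this number goes down, which means the model assigns higher probability to the labeled outputs.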

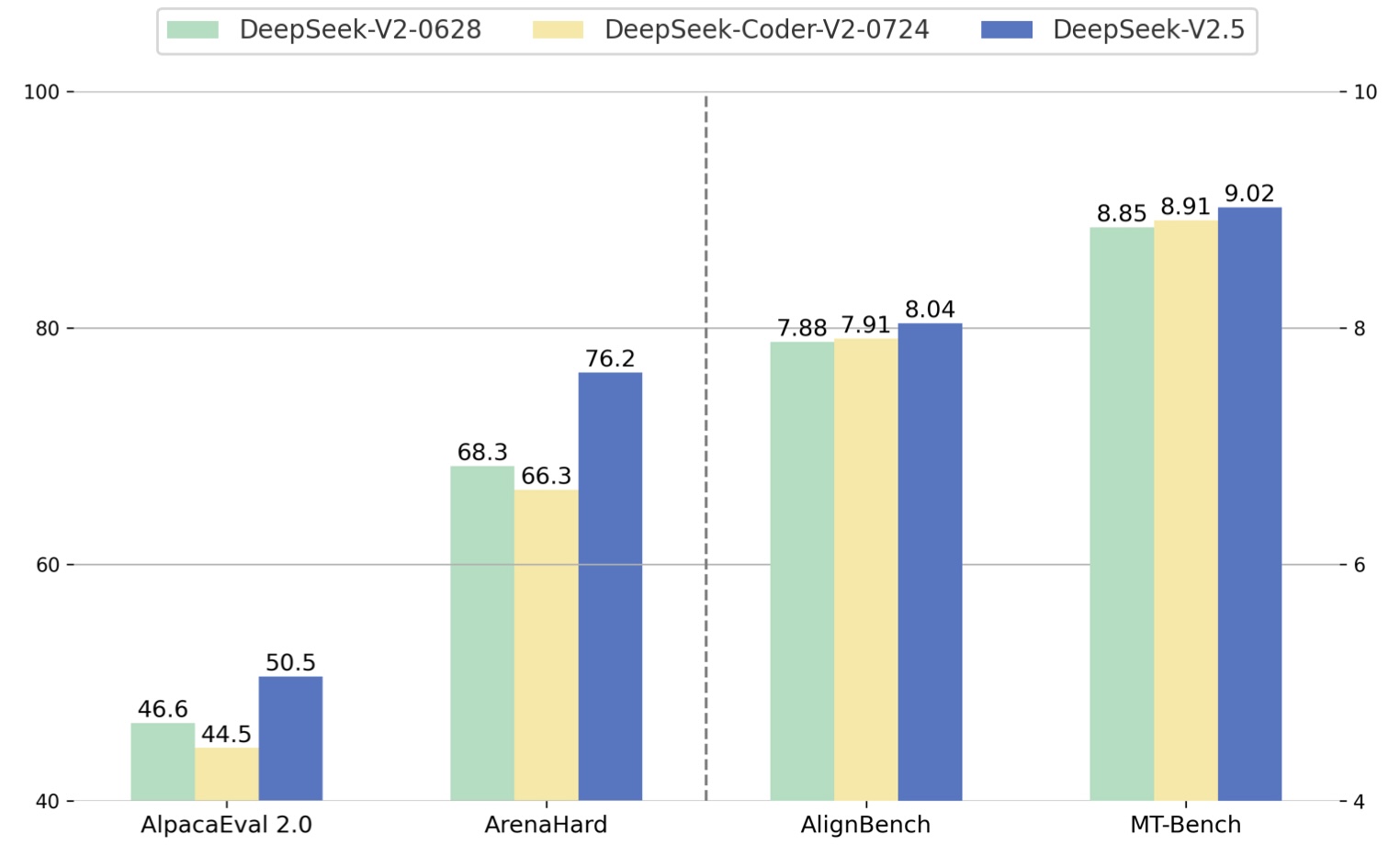

This approach allowed s1 to replicate Gemini’s problem-solving techniques at a fraction of the cost. For comparison, DeepSeek’s R1 model, designed to rival OpenAI’s o1, reportedly required expensive reinforcement learning pipelines.

Cost and compute efficiency

Training s1 took under 30 minutes on 16 NVIDIA H100 GPUs. This cost researchers roughly $20-$50 in cloud compute credits!
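A quick back-of-the-envelope check shows how these numbers fit together. The hourly GPU rental prices below are hypothetical values chosen for illustration, since real cloud prices vary by provider; only the GPU count and the training time come from the figures above.

```python
NUM_GPUS = 16
TRAINING_HOURS = 0.5  # "under 30 minutes", used here as an upper bound


def training_cost(price_per_gpu_hour: float) -> float:
    """Cost of one training run at a given rental price per GPU-hour."""
    gpu_hours = NUM_GPUS * TRAINING_HOURS
    return gpu_hours * price_per_gpu_hour


# Hypothetical rental rates in USD per H100 GPU-hour.
for price in (2.5, 6.0):
    print(f"${price:.2f}/GPU-hour -> ${training_cost(price):.2f} per run")
```

At 8 GPU-hours per run, any rental price between about $2.50 and $6 per GPU-hour lands in the quoted $20-$50 range.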

By contrast, OpenAI’s o1 and comparable models require far larger compute budgets. The base model for s1 was an off-the-shelf model from Alibaba’s Qwen family, freely available on GitHub.

Here are the key factors that helped achieve this cost efficiency:

Low-cost training: The s1 model achieved impressive results with less than $50 in cloud computing credits! Niklas Muennighoff, a Stanford researcher involved in the project, estimated that the required compute could be rented for around $20. This showcases the project’s remarkable affordability and accessibility.

Minimal Resources: The team used an off-the-shelf base model. They fine-tuned it through distillation, extracting reasoning capabilities from Google’s Gemini 2.0 Flash Thinking Experimental.

Small Dataset: The s1 model was trained using a small dataset of just 1,000 curated questions and answers. It included the reasoning behind each answer from Google’s Gemini 2.0.

Quick Training Time: The model was trained in less than 30 minutes using 16 NVIDIA H100 GPUs.

Ablation Experiments: The low cost allowed researchers to run many ablation experiments. They made small variations in configuration to find out what works best. For example, they tested whether the model should use ‘Wait’ rather than ‘Hmm’.

Availability: The development of s1 offers an alternative to high-cost AI models like OpenAI’s o1. It brings the potential for powerful reasoning models to a broader audience. The code, data, and training details are available on GitHub.

These factors challenge the notion that massive investment is always necessary for building capable AI models. They democratize AI development, allowing smaller teams with limited resources to achieve significant results.

The ‘Wait’ Trick

A clever innovation in s1’s design involves adding the word “wait” during its reasoning process.

This simple prompt extension forces the model to pause and double-check its answers, improving accuracy without additional training.

The ‘Wait’ Trick is an example of how careful prompt engineering can significantly improve AI model performance. This improvement does not rely solely on increasing model size or training data.
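Here is a minimal sketch of how the trick can be wired around a text generator. The `generate` callable, the `</think>` marker, and the toy model are all hypothetical stand-ins so the example runs without a real LLM; they are not taken from the s1 code base.

```python
from typing import Callable

END_OF_THINKING = "</think>"  # hypothetical end-of-reasoning marker


def think_with_wait(
    generate: Callable[[str], str],
    prompt: str,
    extra_rounds: int = 1,
) -> str:
    """Extend the model's reasoning by appending "Wait," when it tries to stop.

    `generate(text)` is assumed to return the model's continuation of `text`,
    ending at the point where the model would normally stop reasoning.
    """
    trace = generate(prompt)
    for _ in range(extra_rounds):
        trace += " Wait,"                  # nudge the model to reconsider
        trace += generate(prompt + trace)  # let it continue reasoning
    return trace + END_OF_THINKING


def toy_model(text: str) -> str:
    # Stand-in for a real LLM call: wrong at first, corrects itself when nudged.
    if "Wait," in text:
        return " let me recheck: 2 + 2 = 4."
    return "2 + 2 = 5."


result = think_with_wait(toy_model, "Q: 2 + 2? ", extra_rounds=1)
print(result)
```

With a real model, each extra round costs more tokens at inference time, which is the trade-off behind test-time scaling: more thinking in exchange for better accuracy.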

Learn more about writing prompts – Why Structuring or Formatting Is Crucial In Prompt Engineering?

Advantages of s1 over industry-leading AI models

Let’s understand why this development is important for the AI engineering industry:

1. Cost accessibility

OpenAI, Google, and Meta invest billions in AI infrastructure. However, s1 shows that high-performance reasoning models can be built with minimal resources.

For instance:

OpenAI’s o1: Developed using proprietary methods and expensive compute.

DeepSeek’s R1: Relied on large-scale reinforcement learning.

s1: Achieved comparable results for under $50 using distillation and SFT.

2. Open-source transparency

s1’s code, training data, and model weights are publicly available on GitHub, unlike closed-source models like o1 or Claude. This transparency fosters community collaboration and makes audits possible.

3. Performance on benchmarks

In tests measuring mathematical problem-solving and coding tasks, s1 matched the performance of leading models like o1. It also approached the performance of R1. For instance:

– The s1 model outperformed OpenAI’s o1-preview by up to 27% on competition math questions from the MATH and AIME24 datasets

– GSM8K (math reasoning): s1 scored within 5% of o1.

– HumanEval (coding): s1 achieved ~70% accuracy, comparable to R1.

– A key feature of s1 is its use of test-time scaling, which improves its accuracy beyond its initial capabilities. For example, it increased from 50% to 57% on AIME24 problems using this technique.
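To put the test-time scaling gain in perspective, the small calculation below separates the absolute gain (in percentage points) from the relative gain. It uses only the two AIME24 figures quoted above.

```python
before, after = 50.0, 57.0  # AIME24 accuracy (%) without and with test-time scaling

absolute_gain = after - before                    # in percentage points
relative_gain = (after - before) / before * 100   # as a percent of the starting score

print(f"+{absolute_gain:.0f} points, {relative_gain:.0f}% relative improvement")
```

A 7-point jump may look small, but it comes purely from letting the model think longer at inference time, with no extra training.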

s1 doesn’t surpass GPT-4 or Claude-v1 in raw capability. These models excel in specialized domains like medical oncology.

While distillation methods can replicate existing models, some experts note they may not lead to breakthrough advances in AI performance.

Still, its cost-to-performance ratio is unrivaled!

s1 is challenging the status quo

What does the development of s1 mean for the world?

Commoditization of AI Models

s1’s success raises existential questions for AI giants.

If a small team can replicate cutting-edge reasoning for $50, what sets a $100 million model apart? This threatens the “moat” of proprietary AI systems, pushing companies to innovate beyond distillation.

Legal and ethical issues

OpenAI has previously accused competitors like DeepSeek of improperly harvesting data via API calls. However, s1 sidesteps this issue by using Google’s Gemini 2.0 within its terms of service, which permit non-commercial research.

Shifting power dynamics

s1 exemplifies the “democratization of AI”, enabling startups and researchers to compete with tech giants. Projects like Meta’s LLaMA (which requires expensive fine-tuning) now face pressure from cheaper, purpose-built alternatives.

The limitations of the s1 model and future directions in AI engineering

Not everything is perfect with s1 for now, and it would be unfair to expect that given its limited resources. Here are the s1 model limitations you should know before adopting it:

Scope of Reasoning

s1 excels in tasks with clear step-by-step logic (e.g., math problems) but struggles with open-ended creativity or nuanced context. This mirrors limitations seen in models like LLaMA and PaLM 2.

Dependency on parent models

As a distilled model, s1’s capabilities are inherently bounded by Gemini 2.0’s knowledge. It cannot surpass the original model’s reasoning, unlike OpenAI’s o1, which was trained from scratch.

Scalability concerns

While s1 demonstrates “test-time scaling” (extending its reasoning steps), real innovation, like GPT-4’s leap over GPT-3.5, still requires massive compute budgets.

What’s next from here?

The s1 experiment underscores two key trends:

Distillation is democratizing AI: Small teams can now replicate high-end capabilities!

The value shift: Future competition may center on data quality and unique architectures, not just compute scale.

Meta, Google, and Microsoft are investing over $100 billion in AI infrastructure. Open-source projects like s1 could force a rebalancing. This change would allow innovation to thrive at both the grassroots and corporate levels.

s1 isn’t a replacement for industry-leading models, but it’s a wake-up call.

By slashing costs and opening up access, it challenges the AI ecosystem to prioritize efficiency and inclusivity.

Whether this leads to a wave of low-cost competitors or tighter restrictions from tech giants remains to be seen. One thing is clear: the era of “bigger is better” in AI is being redefined.

Have you tried the s1 model?

The world is moving fast with AI engineering advancements – and this is now a matter of days, not months.

I will keep covering the latest AI models for you all to try. It is worth learning about the optimizations made to reduce costs or innovate. This is truly a fascinating space, and I am enjoying writing about it.

If you spot any issue or correction, or have any doubt, please comment. I would love to fix it or clear up any doubt you have.

At Applied AI Tools, we want to make learning accessible. You can learn how to use the many available AI tools for your personal and professional use. If you have any questions, email content@merrative.com and we will cover them in our guides and blogs.

Learn more about AI concepts:

– 2 key insights on the future of software development – Transforming Software Design with AI Agents

– Explore AI Agents – What is OpenAI o3-mini

– Learn what the tree of thoughts prompting method is

– Make the most of Google Gemini – 6 latest Generative AI tools by Google to improve workplace productivity

– Learn what influencers and experts think about AI’s impact on the future of work – 15+ Generative AI quotes on future of work, impact on jobs and workforce productivity

You can subscribe to our newsletter to get notified when we publish new guides!


This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.

Get in touch if you would like to create a content library like ours. We specialize in the niche of Applied AI, Technology, Machine Learning, or Data Science.